
Fair Trials’ tool shows how ‘predictive policing’ discriminates and unjustly criminalises people

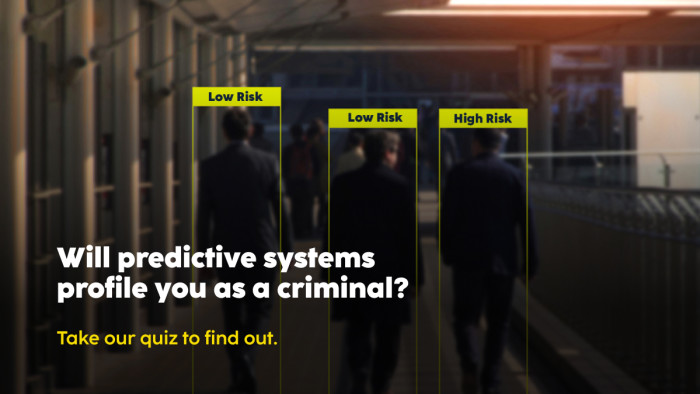

Today, Fair Trials has launched an interactive online tool designed to show how the police and other criminal justice authorities are using predictive systems to profile people and areas as criminal, even before alleged crimes have occurred.

Our research has shown that more and more police forces and criminal justice authorities across Europe are using automated and data-driven systems, including artificial intelligence (AI), to profile people and try to ‘predict’ their ‘risk’ of committing a crime in the future, as well as to profile areas to ‘predict’ whether crime will occur there in future.

These systems are created and operated using discriminatory data, reinforcing structural and institutional inequalities across society and leading to unjust and racist outcomes. These predictions can lead to surveillance, questioning and home raids, and can influence pre-trial detention and sentencing decisions.

There is growing opposition to predictive policing and justice systems across Europe, with many organisations and some Members of the European Parliament (MEPs) supporting a ban.

Fair Trials is calling on MEPs to ban predictive systems when they vote on the Artificial Intelligence Act in the coming months.

We hope that our example predictive policing and justice tool will raise awareness of the discriminatory outcomes generated by these systems. Each question in our predictive tool directly relates to real data used by police and criminal justice authorities across Europe to profile people and places. Using information about someone’s school attendance, family circumstances, ethnicity and finances to decide if they could be a criminal is fundamentally discriminatory. The only way to protect people and their rights across Europe is to ban these systems.

About Fair Trials’ predictive policing tool

The questions in our interactive tool directly match information that is actively used by law enforcement and criminal justice authorities in their own predictive and profiling systems and databases.

In real life, matching just a few of the pieces of information our questions ask about can be enough for a person to be marked as a ‘risk’. Likewise, if data about an area or neighbourhood fits a similar profile, it can be marked as a place where crime will occur in future.

“Imagine waking up one day with the police barging into your house after AI has flagged you as a suspect. Then it’s up to you to prove you’re innocent. It is you versus the computer. This is dangerous, intrusive and disproportionate. The myth that a calculation is more ethical than a human can destroy people’s lives. Predictive policing erodes fundamental principles of our democratic societies, such as the presumption of innocence, our right to privacy and the rule of law.”

Predictive policing and justice systems

Predictive systems have been shown to use discriminatory and flawed data and profiles to make these assessments and predictions. They try to determine someone’s risk of criminality or predict the locations of crime based on the following information:

- Education

- Family life and background

- Neighbourhoods and where people live

- Access to or engagement with certain public services, like welfare, housing and/or healthcare

- Race and/or ethnicity

- Nationality

- Credit scores, credit rating or other financial information

- ‘Contact’ with the police – whether as a victim or witness to a crime, or as a suspect, even if not charged or convicted

It is crucial to acknowledge that AI systems intensify elements of structural injustice, and we must therefore ban the use of predictive systems in law enforcement and criminal justice once and for all. Any AI or automated system that law enforcement and criminal justice authorities deploy to make behavioural predictions about individuals or groups, in order to identify people likely to commit a crime or areas where crime is likely to occur, based on historical data, past behaviour or affiliation to a particular group, will inevitably perpetuate and amplify existing discrimination. This will particularly impact people belonging to certain ethnicities or communities due to bias in AI systems.

Read more

Automating Injustice: the use of artificial intelligence and automated decision-making systems in criminal justice in Europe (2021)

AI Act: EU must ban predictive AI systems in policing and criminal justice (2022)

An EU Artificial Intelligence Act for Fundamental Rights: A Civil Society Statement (2022)