Fair Trials, European Digital Rights (EDRi) and 49 other civil society organisations launched a collective statement to call on the EU to ban predictive policing systems in the Artificial Intelligence Act (AIA).

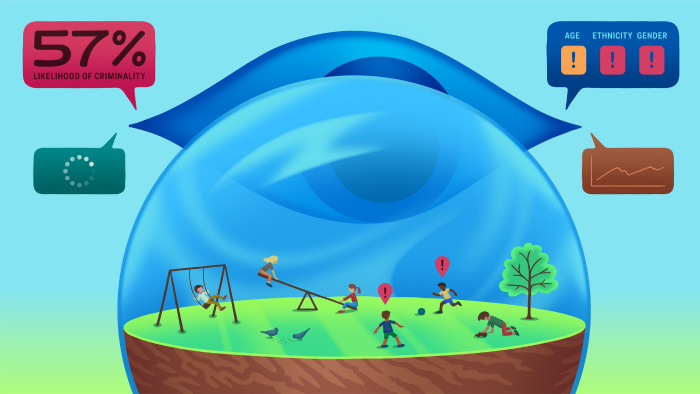

Artificial intelligence (AI) systems are increasingly used by European law enforcement and criminal justice authorities to profile people and areas, predict supposed future criminal behaviour or occurrence of crime, and assess the alleged ‘risk’ of offending or criminality in the future.

However, these systems have been demonstrated to disproportionately target and discriminate against marginalised groups, infringe on liberty, fair trial rights and the presumption of innocence, and reinforce structural discrimination and power imbalances.

Griff Ferris, Legal and Policy Officer, Fair Trials, stated:

“Age-old discrimination is being hard-wired into new age technologies in the form of predictive and profiling AI systems used by law enforcement and criminal justice authorities. Seeking to predict people’s future behaviour and punish them for it is completely incompatible with the fundamental right to be presumed innocent until proven guilty. The only way to protect people from these harms and other fundamental rights infringements is to prohibit their use.”

Fundamental harms are already being caused by predictive, profiling and risk assessment AI systems in the EU, including:

Discrimination, surveillance and over-policing

Predictive AI systems reproduce and reinforce discrimination on grounds including but not limited to: racial and ethnic origin, socio-economic and work status, disability, migration status and nationality. The data used to create, train and operate AI systems often reflects historical, systemic, institutional and societal discrimination, which results in racialised people, communities and geographic areas being over-policed and disproportionately surveilled, questioned, detained and imprisoned.

Infringement of the right to liberty, the right to a fair trial and the presumption of innocence

Individuals, groups and locations are targeted and profiled as criminal. This results in serious criminal justice and civil outcomes and punishments, including deprivations of liberty, before those targeted have carried out the alleged act. The outputs of these systems are not reliable evidence of actual or prospective criminal activity and should never be used as justification for any law enforcement action.

Lack of transparency, accountability and the right to an effective remedy

AI systems used by law enforcement often have technological or commercial barriers that prevent effective and meaningful scrutiny, transparency, and accountability. It is crucial that individuals affected by these systems’ decisions are aware of their use and have clear and effective routes to challenge it.

Sarah Chander, Senior Policy Adviser, EDRi, stated:

“AI for predictive policing is reinforcing racial profiling and compromising the rights of over-policed communities. The EU’s AI Act must prevent this with a prohibition on predictive policing systems.”

The signatories call for a full prohibition of predictive and profiling AI systems in law enforcement and criminal justice in the Artificial Intelligence Act. Such systems amount to an unacceptable risk and therefore must be included as a ‘prohibited AI practice’ in Article 5 of the AIA.

Signed by:

- Fair Trials (International)

- European Digital Rights (EDRi) (Europe)

- Access Now (International)

- AlgoRace (Spain)

- AlgoRights (Spain)

- AlgorithmWatch (Europe)

- Amnesty Tech (International)

- Antigone (Italy)

- Big Brother Watch (UK)

- Bits of Freedom (Netherlands)

- Bulgarian Helsinki Committee (Bulgaria)

- Centre for European Constitutional Law – Themistokles and Dimitris Tsatsos Foundation (Europe)

- Citizen D / Državljan D (Slovenia)

- Civil Rights Defenders (Sweden)

- Controle Alt Delete (Netherlands)

- Council of Bars and Law Societies of Europe (CCBE) (Europe)

- Czech League for Human Rights (Czech Republic)

- De Moeder is de Sleutel (Netherlands)

- Digital Fems (Spain)

- Electronic Frontier Norway (Norway)

- European Centre for Not-for-Profit Law (ECNL) (Europe)

- European Criminal Bar Association (ECBA) (Europe)

- European Disability Forum (EDF) (Europe)

- European Network Against Racism (ENAR) (Europe)

- European Sex Workers Alliance (ESWA) (Europe)

- Equinox Initiative for Racial Justice (Europe)

- Equipo de Implementación España Decenio Internacional Personas Afrodescendientes (Spain)

- Eticas Foundation (Europe)

- Fundación Secretariado Gitano (Europe)

- Ghett’Up (France)

- Greek Helsinki Monitor (Greece)

- Hackney Account (UK)

- Helsinki Foundation for Human Rights (Poland)

- Homo Digitalis (Greece)

- Human Rights Watch (International)

- International Committee of Jurists (International)

- Irish Council for Civil Liberties (Ireland)

- Iuridicum Remedium (IuRe) (Czech Republic)

- Liga voor Mensenrechten (Belgium)

- Ligue des Droits Humains (Belgium)

- La Quadrature du Net (France)

- Novact (Spain)

- Observatorio de Derechos Humanos y Empresas en la Mediterránea (ODHE) (Europe)

- Open Society European Policy Institute (Europe)

- Panoptykon Foundation (Poland)

- PICUM (Europe)

- Racial Justice Network (UK)

- Refugee Law Lab (Canada)

- Rights International Spain (Spain)

- SHARE Foundation (Serbia)

- Statewatch (Europe)

- ZARA – Zivilcourage und Anti-Rassismus-Arbeit (Austria)