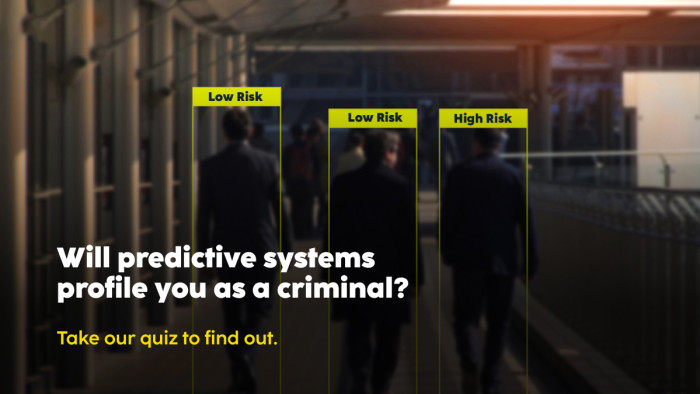

MEPs among those profiled as ‘at risk’ of future criminal behaviour: Fair Trials' tool exposes discriminatory and unjust predictive policing

- Several Members of the European Parliament (MEPs) have been profiled as ‘at risk’ of criminal behaviour along with many members of the public, after using Fair Trials’ example criminal prediction tool.

- The tool mirrors how predictive systems are used in law enforcement and criminal justice in the EU and exposes how these systems make arbitrary conclusions about people’s ‘risk’ of future criminal activity.

- More than 1000 emails were sent to MEPs calling on them to ban these systems in the EU’s Artificial Intelligence Act. Fair Trials and more than 50 other rights and civil society organisations are also calling on MEPs to ban the use of these systems in the Act.

Fair Trials’ predictive tool, launched on 31 January 2023, asks users questions matched directly to information which is actively used by law enforcement and criminal justice authorities in predictive and profiling systems.

MEPs from the Socialists & Democrats, Renew, Greens/EFA and the Left Group in the European Parliament were among those who used Fair Trials’ example criminal prediction tool to show how harmful, unjust and discriminatory these systems are. After using the online tool, MEPs Karen Melchior, Cornelia Ernst, Tiemo Wölken, Petar Vitanov and Patrick Breyer were all profiled as ‘medium risk’ of committing a crime in future.

In real-life situations where authorities use these systems, the consequences of being profiled in this way could include: being entered onto law enforcement databases and subjected to close monitoring, random questioning, and stop and search; having your risk score shared with your school, employer, health and immigration agencies, child protection services, and others; being denied bail and held in pre-trial detention should you be arrested.

Several other MEPs also shared the tool and expressed their support for banning predictive policing and justice systems, including Birgit Sippel, Kim van Sparrentak, Tineke Strik and Monica Semedo.

Members of the public across the EU also completed the tool, and over 1000 emails were sent via Fair Trials’ website to MEPs calling for predictive and profiling systems in law enforcement and criminal justice to be banned.

Griff Ferris, Senior Legal and Policy Officer at Fair Trials, stated:

“Our interactive predictive tool shows just how unjust and discriminatory these systems are. It might seem unbelievable that law enforcement and criminal justice authorities are making predictions about criminality based on people’s backgrounds, class, ethnicity and associations, but that is the reality of what is happening in the EU.

“There’s widespread evidence that these predictive policing and criminal justice systems lead to injustice, reinforce discrimination and undermine our rights. The only way to protect people and their rights across Europe is to prohibit these criminal prediction and profiling systems, against people and places.”

Petar Vitanov, Member of the European Parliament (S&D, BG) and shadow rapporteur for the AI Act, said:

“I have never thought that we will live in a sci-fi dystopia where machines will ‘predict’ if we are about to commit a crime or not. I grew up in a low-income neighbourhood, in a poor Eastern European country, and the algorithm profiled me as a potential criminal. There should be no place in the EU for such systems – they are unreliable, biased and unfair.”

Karen Melchior, Member of the European Parliament (Renew, DK) and member of the Committee on Legal Affairs (JURI), said:

“I am not surprised the predictive system profiled me as ‘at risk’ of future criminal behaviour, because it is an automated system that responds to the inputs asked for and received. Automation of judging human character and predicting human behaviour will lead to discrimination and random decisions that will alter people’s lives and opportunities.

“We cannot allow funds to be misplaced from proper police work and well-funded as well as independent courts to biased and random technology. The promised efficiency will be lost in the clean-up after wrong decisions – when we catch them. Worst of all, we risk destroying lives of innocent people. The use of predictive policing mechanisms must be banned.”

Despite being known to reproduce and reinforce discrimination, more and more police forces and criminal justice authorities are using these systems to ‘predict’ if someone will commit a crime or reoffend.

People profiled as ‘at risk’ on the basis of this information can face serious consequences, including regular stop and search, questioning and arrest when a crime is committed in their area, and even having their children removed by social services. Areas and neighbourhoods are also subjected to these predictions and profiles, leading to similar outcomes. These predictions and profiles are also used in pre-trial detention and prosecution decisions, as well as in sentencing and probation.
