Key EU Parliament votes tomorrow on major AI law including landmark ban on ‘predictive policing’ and criminal ‘prediction’ systems

- MEPs in key European Parliament Committees will vote tomorrow to finalise the EU’s pioneering AI Act, which officials and MEPs have been negotiating for several years

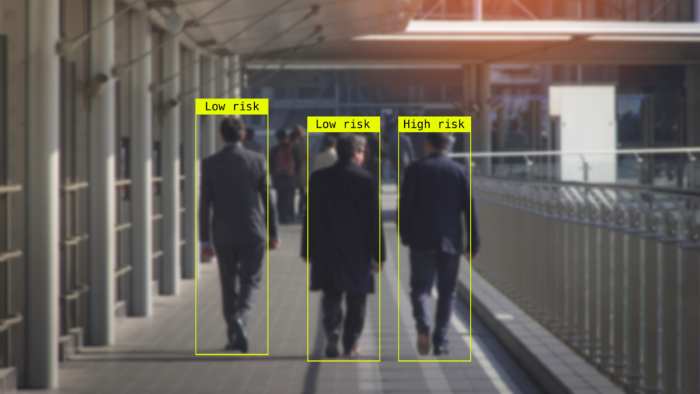

- A ban on ‘predictive policing’ and criminal ‘prediction’ systems, which would be the first of its kind in Europe, has been tabled as part of the amendments to the AI Act

- Fair Trials, European Digital Rights, Access Now, Amnesty Tech and Human Rights Watch are among more than 50 groups across Europe calling for a ban, alongside support from MEPs across the European Parliament

Tomorrow, MEPs will vote to finalise the text of the EU AI Act, a flagship legislative proposal to regulate AI based on its potential to cause harm.

Among the amendments to be voted on is a prohibition on ‘predictive policing’ and criminal ‘prediction’ systems used by law enforcement and criminal justice authorities. The prohibition would be the first of its kind in Europe.

The use of these systems by law enforcement and criminal justice authorities has been proven to reproduce and reinforce existing discrimination, which already results in Black people, Roma and other minoritised ethnic people being disproportionately stopped and searched, arrested, detained and imprisoned across Europe. Fair Trials has documented numerous systems which can result, and have resulted, in such discrimination, as well as how attempts to ‘predict’ criminal behaviour undermine fundamental rights, including the right to a fair trial and the presumption of innocence.

Fair Trials urges MEPs to protect people and their rights and vote to ban predictive policing and criminal prediction systems in the AI Act.

Griff Ferris, Senior Legal and Policy Officer at Fair Trials, said:

“Time and time again, we’ve seen how the use of these systems exacerbates and reinforces racism and discrimination in policing and the criminal justice system, feeds systemic inequality in society, and ultimately destroys people’s lives.

“We urge MEPs to vote to protect people and their rights by prohibiting these intrusive, harmful systems, as well as ensuring a proper regime of transparency and accountability for AI and other automated systems in high-risk areas.

“Police and criminal justice authorities in Europe must not be allowed to use systems which automate injustice, undermine fundamental rights and result in discriminatory outcomes.”

Widespread support for the prohibition

The prohibition is supported by more than 50 rights groups, lawyers’ associations and other civil society organisations across Europe, including European Digital Rights (EDRi), Access Now, Human Rights Watch, Amnesty Tech, the Council of Bars and Law Societies of Europe and the European Criminal Bar Association, among many others.

Many MEPs have also publicly supported a ban. Co-rapporteur of the AI Act, Dragos Tudorache, has said:

“Predictive policing goes against the presumption of innocence and therefore against European values. We do not want it in Europe.”

The other co-rapporteur of the Act, Brando Benifei MEP, said in a LIBE Committee debate:

“Predictive techniques to fight crime also have a huge risk of discrimination, as well as lack of evidence about how accurate they actually are. We’re undermining the basis of our democracy, the presumption of innocence.”

Birgit Sippel, Member of the European Parliament (S&D, DE) and member of the Civil Liberties, Justice and Home Affairs Committee, said:

“It is crucial to acknowledge that elements of structural injustice are intensified by AI systems and we must therefore ban the use of predictive systems in law enforcement and criminal justice once and for all. Any AI or automated systems that are deployed by law enforcement and criminal justice authorities to make behavioural predictions on individuals or groups to identify areas and people likely to commit a crime based on historical data, past behaviour or an affiliation to a particular group will inevitably perpetuate and amplify existing discrimination. This will particularly impact people belonging to certain ethnicities or communities due to bias in AI systems.”

Discrimination, surveillance and infringement of fundamental rights

The need for a prohibition is based on detailed research by Fair Trials into the use and impact of these systems across Europe, with case studies and examples set out in the report Automating Injustice: the use of artificial intelligence and automated decision-making systems in criminal justice in Europe.

Fair Trials’ report demonstrates that law enforcement and criminal justice data used to create, train and operate AI systems is often reflective of historical, systemic, institutional and societal discrimination, which results in racialised people, communities and geographic areas being over-policed and disproportionately surveilled, questioned, detained and imprisoned.

Fair Trials’ research found that AI and automated decision-making systems currently used in Europe:

- infringe fundamental rights, including the right to a fair trial, the presumption of innocence and the right to privacy;

- legitimise and exacerbate racial and ethnic profiling and discrimination; and

- result in the repeated targeting, surveillance and over-policing of minoritised ethnic and working-class communities.

Fair Trials has created an ‘example’ predictive tool to allow people to see how these systems use information to profile them and predict criminality.

International prohibitions on predictive policing and criminal ‘prediction’ systems

In Germany, the Federal Constitutional Court declared that automated police surveillance and data analysis software was unconstitutional on the grounds of potential discrimination and infringement of the right to informational self-determination.

In the US, a number of cities have implemented bans or prohibitions on predictive policing systems, including New Orleans; Oakland, California; Santa Cruz, California; and Pittsburgh, Pennsylvania, among others.

Many more police departments, including multiple forces in the United States as well as in the UK, have themselves chosen to stop using these systems, mostly on the basis that they did not work.

Amendments

Fair Trials urges all MEPs to vote in favour of amendment CA 11 to protect people and their fundamental rights by prohibiting predictive policing and criminal prediction systems.

Fair Trials also supports other amendments to the AI Act which:

- require public transparency of ‘high-risk’ AI systems on a public register;

- give people the right to an explanation of an AI system decision;

- give people meaningful ability to challenge AI systems and obtain effective remedies;

- prohibit remote biometric identification (such as face recognition surveillance);

- ensure that predictive AI systems in migration are considered high risk;

- ensure that ‘high-risk’ systems have accessibility requirements for people with disabilities; and

- widen the definition of AI to cover the full range of AI systems which have been proven to impact fundamental rights.